What happened in Mumbai was a classic "hot flash" event: such events are hard to detect before they happen, and they’re over relatively quickly. Once the event has started, there is little to no time to deploy anything that will still be relevant.

It was that crisis that got two members of the Ushahidi dev community (Chris Blow and Kaushal Jhalla) thinking about what needs to be done when massive amounts of information are flying around. We've reached the point where the barriers that once kept ordinary people from openly sharing valuable tactical and strategic information are falling away. How do you separate the good data from the bad?

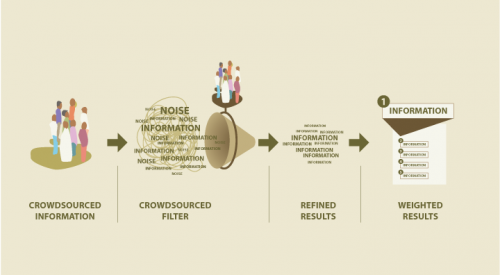

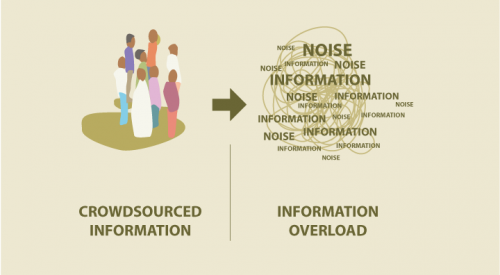

When the noise is overwhelming the signal, what do you do?

Thus began project "Swift River" at Ushahidi, which for three months now has been thought through, wireframed, rethought and prototyped. Chris and Kaushal started by asking: what can we do that most significantly affects the quality of information in the first three hours of a crisis? Their answer was another question: what if we created a swift river of information that gets edited quickly? Events like US Airways Flight 1549 and the US inauguration gave us real-time events with massive amounts of data to test ideas on.

Even after all that, we're not done, but we do have some solid ideas about what needs to happen. We think of it as using a crowd to filter, or edit, the already crowdsourced information coming through tools like Ushahidi, Twitter, Flickr and YouTube. To us, Swift River is "Crowdsourcing the Filter".

How does it work? (non-tech version)

Since we don't believe there will ever be one tool that everyone uses to gather information on a global crisis, we see a future where a tool like Swift River aggregates data from sources such as the aforementioned Twitter, Ushahidi, Flickr and YouTube, as well as local mobile and web social networks. At this point what you have is a lot of noise and very little signal about the value of the data you're seeing.
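To make the idea of a single "river" concrete, here is a minimal Python sketch. It assumes each source (Twitter, Ushahidi, Flickr, YouTube and so on) has already been wrapped in an adapter that yields reports in time order. The `Report` type, its fields and the `river` function are hypothetical, not part of any Swift River or Ushahidi API; the point is only that heterogeneous feeds get normalized into one record type and merged into one time-ordered stream.

```python
from dataclasses import dataclass, field
from heapq import merge
from typing import Iterable, Iterator


@dataclass(order=True)
class Report:
    """Hypothetical normalized report; ordering compares the timestamp only."""
    timestamp: float                     # seconds since epoch
    source: str = field(compare=False)   # e.g. "twitter", "ushahidi"
    text: str = field(compare=False)
    lat: float = field(compare=False, default=0.0)
    lon: float = field(compare=False, default=0.0)


def river(*feeds: Iterable[Report]) -> Iterator[Report]:
    """Merge feeds that are each already sorted by time into one sorted stream."""
    return merge(*feeds)


# Made-up sample data, one list per source adapter.
twitter = [Report(1.0, "twitter", "Road blocked near the station"),
           Report(4.0, "twitter", "Crowd gathering at the square")]
ushahidi = [Report(2.0, "ushahidi", "Fire reported at a hotel")]
flickr = [Report(3.0, "flickr", "Photo: smoke over the harbour")]

for r in river(twitter, ushahidi, flickr):
    print(r.timestamp, r.source, r.text)
```

`heapq.merge` is lazy, so new items can be pulled from each feed as they arrive rather than loading everything up front.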

Anyone with access to a computer (and, in the future, possibly just a mobile phone) can then rate information as it comes in. This is classic "crowdsourcing": the more people weigh in on any specific data point, the higher the probability of finding the right answer. Information with greater veracity is highlighted and bubbles to the top, weighted also by the proximity, severity and category of the incident.
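One way to read "the more people weigh in, the more likely the right answer" is to score each report by the share of raters who confirm it, smoothed so that a report with only one or two votes stays near 0.5 until more evidence arrives. The sketch below does that with add-one (Laplace) smoothing and then multiplies in weights for proximity, severity and category. The weight values, the 0-to-1 proximity and severity scales and the category table are all hypothetical placeholders; this is one possible scoring rule, not Swift River's actual formula.

```python
from dataclasses import dataclass

# Hypothetical category weights; real values would need tuning.
CATEGORY_WEIGHT = {"violence": 1.0, "fire": 0.9, "traffic": 0.5}


@dataclass
class RatedReport:
    text: str
    confirms: int      # raters who said "this is true"
    disputes: int      # raters who said "this is false"
    proximity: float   # 0..1, higher = closer to the incident (assumed scale)
    severity: float    # 0..1 (assumed scale)
    category: str


def veracity(r: RatedReport) -> float:
    """Laplace-smoothed share of confirming votes; exactly 0.5 with no votes."""
    return (r.confirms + 1) / (r.confirms + r.disputes + 2)


def priority(r: RatedReport) -> float:
    """Veracity weighted by proximity, severity and category."""
    weight = CATEGORY_WEIGHT.get(r.category, 0.3)  # unknown categories rank low
    return veracity(r) * (0.5 + 0.5 * r.proximity) * (0.5 + 0.5 * r.severity) * weight


# Made-up sample reports.
reports = [
    RatedReport("Fire at hotel", 40, 2, proximity=0.9, severity=0.8, category="fire"),
    RatedReport("Bridge collapsed", 1, 0, proximity=0.2, severity=1.0, category="traffic"),
    RatedReport("Gunfire at station", 3, 9, proximity=0.8, severity=0.9, category="violence"),
]

for r in sorted(reports, key=priority, reverse=True):
    print(f"{priority(r):.3f}  {r.text}")
```

The `0.5 + 0.5 * x` form lets proximity and severity boost a report without ever zeroing it out, so a well-confirmed but distant report can still surface.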

At this point we have successfully filtered a large amount of data, something that is difficult for a small team of experts but can be accomplished by a large number of non-experts and experts combined.

What Next?

So far we have some design comps, and David created a rough prototype of the engine driving it for the US inauguration. If this type of tool interests you and you'd like to help, do let us know. Here's a glimpse at some of the idea flows that spur on our conversations at Ushahidi. They were created by Chris Blow, assuming that the user of this tool and protocol is a Twitter user.

The tool is really quite simple, and it can be made better by clustering "like" incidents and reports, by rating users on proximity, history and expertise, and by developing a general protocol so that other developers can build on it as well.
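The two improvements mentioned here can also be sketched. Below, "like" reports are grouped greedily by the Jaccard similarity of their word sets, and each rater's vote is weighted by a reputation score built from proximity, history (the share of past ratings that turned out correct) and expertise. Everything here is an assumption for illustration: the 0.5 similarity threshold, the reputation formula and the `Rater` fields are hypothetical, and a real system would likely need stronger text similarity than plain word overlap.

```python
from dataclasses import dataclass


def words(text: str) -> set[str]:
    return set(text.lower().split())


def jaccard(a: set[str], b: set[str]) -> float:
    """Word overlap |A & B| / |A | B|; 0.0 when both sets are empty."""
    return len(a & b) / len(a | b) if a | b else 0.0


def cluster(texts: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy clustering: each text joins the first cluster whose seed is similar enough."""
    clusters: list[list[str]] = []
    for t in texts:
        for c in clusters:
            if jaccard(words(t), words(c[0])) >= threshold:
                c.append(t)
                break
        else:  # no similar cluster found: start a new one
            clusters.append([t])
    return clusters


@dataclass
class Rater:
    proximity: float  # 0..1, assumed: how close the rater is to the incident
    correct: int      # past ratings later confirmed
    total: int        # past ratings overall
    expert: bool      # hypothetical flag, e.g. a verified responder


def reputation(r: Rater) -> float:
    history = (r.correct + 1) / (r.total + 2)  # smoothed track record
    return history * (0.5 + 0.5 * r.proximity) * (2.0 if r.expert else 1.0)


def weighted_score(votes: list[tuple[Rater, bool]]) -> float:
    """Reputation-weighted share of 'true' votes; 0.5 when there are no votes."""
    total = sum(reputation(r) for r, _ in votes)
    yes = sum(reputation(r) for r, vote in votes if vote)
    return yes / total if total else 0.5


# Made-up sample data.
sample_texts = ["fire at the harbour hotel", "Fire at the harbour hotel now",
                "road closed near the station"]
print(cluster(sample_texts))

local = Rater(proximity=1.0, correct=18, total=20, expert=False)
remote = Rater(proximity=0.0, correct=2, total=10, expert=False)
medic = Rater(proximity=0.5, correct=9, total=10, expert=True)
votes = [(local, True), (remote, False), (medic, True)]
print(round(weighted_score(votes), 3))
```

With a simple majority the sample report would score 2/3; weighting by reputation pushes it higher because the two raters who confirm it are close by or have a strong track record.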